
Add GPU support #346


Codecov Report

Coverage diff, `main` vs. #346:

| | main | #346 | +/- |
|---|---:|---:|---:|
| Coverage | 83.97% | 83.61% | -0.37% |
| Files | 8 | 8 | |
| Lines | 1604 | 1605 | +1 |
| Hits | 1347 | 1342 | -5 |
| Misses | 257 | 263 | +6 |
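As a sanity check on the Codecov numbers, coverage is hits divided by lines. The sketch below assumes Codecov's default settings of two-decimal precision with rounding down (`coverage.precision: 2` and `coverage.round: down` in `codecov.yml`, as I understand them); under that assumption it reproduces all three percentages, and rounding down is likely why the change shows as -0.37% rather than the -0.36% you get by subtracting the two displayed values. `floor_percent` is a hypothetical helper, not part of Codecov.

```python
import math
from fractions import Fraction


def floor_percent(value: Fraction, precision: int = 2) -> str:
    """Format a ratio as a percentage, rounded down (toward -inf) to `precision` decimals."""
    scale = 10 ** precision
    # Exact rational arithmetic avoids float artifacts before flooring.
    units = math.floor(value * 100 * scale)
    sign = "-" if units < 0 else ""
    whole, frac = divmod(abs(units), scale)
    return f"{sign}{whole}.{frac:0{precision}d}"


# Hits / lines taken from the report: main vs. the PR head.
base = Fraction(1347, 1604)
head = Fraction(1342, 1605)
print(floor_percent(base), floor_percent(head), floor_percent(head - base))
```

Using `Fraction` keeps the division exact, so the floor is applied to the true ratio rather than to a rounded float.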


Does this build use the GPU for you? https://github.com/chidiwilliams/buzz/actions/runs/4062599632

Hello Chidi. I'd like to test it for you, as I'm so excited about your project, but I'm not sure how to access the version you've provided. If there is a test version, I'd be happy to try it; I just don't know how to get it via GitHub. Thanks.

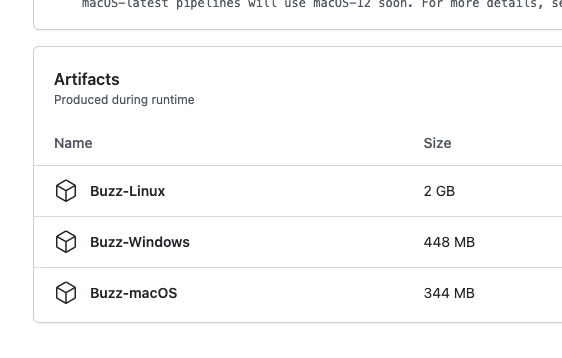

@Sircam19 You'll find this at the bottom of the page I shared:

Hello Chidi. Thank you; obviously too little sleep on my part, and I missed scrolling all the way down. Anyway, I gave it a try and it failed immediately. Here is the screenshot; note the last two attempts (the others are history from days back). The first was with the language recognition setting enabled, and the second was with the language set specifically to French. Happy to send any other information that helps with debugging. Thanks, S

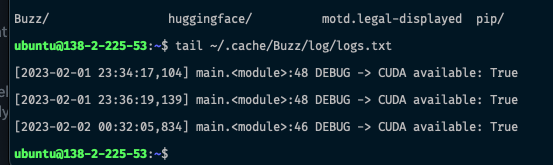

Thanks, @Sircam19. Could you share the logs from

I also just installed Buzz on my non-M1 Mac mini and got the same result.

Here you go. Logs: `[2022-12-01 18:01:30,039] whispr.get_model_path:118 DEBUG -> Loading model = tiny, whisper.cpp = False`

@Sircam19, thanks for the logs. I also just noticed that you're using a Mac. Whisper only supports CUDA-enabled (NVIDIA) GPUs at the moment; support for Mac MPS GPUs has not been merged yet: openai/whisper#382.
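Since Whisper only supports CUDA GPUs at this point, one way to avoid failing on a Mac is to pick the device explicitly and fall back to the CPU. A minimal sketch, assuming a hypothetical helper `pick_whisper_device` (not part of Buzz or Whisper); it relies only on `torch.cuda.is_available()` and returns `"cpu"` when PyTorch is not installed.

```python
def pick_whisper_device() -> str:
    """Return "cuda" if a CUDA GPU is usable, otherwise "cpu" (hypothetical helper)."""
    try:
        import torch  # imported lazily so the sketch also runs without PyTorch
    except ImportError:
        return "cpu"
    # MPS (Apple GPUs) is deliberately not considered: per this thread,
    # Whisper's MPS support had not been merged yet.
    if torch.cuda.is_available():
        return "cuda"
    return "cpu"


print(pick_whisper_device())
```

The result could then be passed as the `device` argument of Whisper's `load_model`, e.g. `whisper.load_model("tiny", device=pick_whisper_device())`, so Mac users get a CPU run instead of an error.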

Running with PyTorch version …:

NVIDIA A10 with CUDA capability sm_86 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_37 sm_50 sm_60 sm_70.

If you want to use the NVIDIA A10 GPU with PyTorch, please check the instructions at https://pytorch.org/get-started/locally/

warnings.warn(incompatible_device_warn.format(device_name, capability, " ".join(arch_list), device_name))

Traceback (most recent call last):

File "main.py", line 50, in <module>

File "whisper/__init__.py", line 120, in load_model

File "torch/nn/modules/module.py", line 1604, in load_state_dict

RuntimeError: Error(s) in loading state_dict for Whisper:

While copying the parameter named "encoder.blocks.0.attn.query.weight", whose dimensions in the model are torch.Size([384, 384]) and whose dimensions in the checkpoint are torch.Size([384, 384]), an exception occurred : ('CUDA error: no kernel image is available for execution on the device\nCUDA kernel errors m…

Meanwhile, upgrading PyTorch to version …

I've already spent a lot of time trying to make this work, and unfortunately I think I'll have to stop here for now. I'll continue if I can find any information on bundling PyTorch 1.13.1 into a PyInstaller project.
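The warning above comes from a compatibility check PyTorch runs for each CUDA device. As far as I can tell, a GPU is treated as supported if the installed build ships kernels (the `sm_XY` entries in `torch.cuda.get_arch_list()`) for the same major compute capability. The A10 is sm_86 (major 8), but this build only has sm_37 through sm_70, which matches the "no kernel image is available" error. A sketch of that check, with hypothetical helper names `is_capability_supported` and `current_gpu_supported`, that a caller could use to fall back to the CPU before loading the model:

```python
from typing import List, Optional, Tuple


def is_capability_supported(capability: Tuple[int, int], arch_list: List[str]) -> bool:
    """True if any sm_XY kernel in arch_list shares the GPU's major compute capability."""
    major, _minor = capability
    # "sm_37" -> 37; entries such as "compute_37" (PTX) are ignored here.
    supported_sm = [int(arch.split("_")[1]) for arch in arch_list if arch.startswith("sm_")]
    return any(sm // 10 == major for sm in supported_sm)


def current_gpu_supported() -> Optional[bool]:
    """Check GPU 0 against the installed PyTorch build; None if there is no usable CUDA."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return is_capability_supported(
        torch.cuda.get_device_capability(0), torch.cuda.get_arch_list()
    )


# The situation from the log: an sm_86 GPU against a build with sm_37..sm_70.
print(is_capability_supported((8, 6), ["sm_37", "sm_50", "sm_60", "sm_70"]))
```

With the arch list from the log this prints `False`, i.e. the CPU fallback would be taken instead of hitting the CUDA error mid-load.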

Totally understand, and it's appreciated. Thanks for trying; your tool is still very useful, with a great level of accuracy for my purposes. THANK YOU.
