Pre-train badger on popular sites #1947


Add a seed.json file, which contains the action_map and snitch_map that the Badger learns after visiting the top 1000 sites in the Majestic Million. Add code in background.js to load it on first run. Remove redundant check for incognito mode in background initialization.

Since Privacy Badger runs in "spanning" incognito mode, chrome.extension.inIncognitoContext should never return true when called from the background page. Remove that check.
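As a rough illustration of the first-run seed loading described in this commit, here is a minimal sketch. `MapStore`, `loadSeedOnFirstRun`, the `isFirstRun` flag, and the example domains are all hypothetical stand-ins, not the extension's actual storage API. The shape of the `snitch_map` entries (tracker domain mapped to a list of first-party sites) is also an assumption.

```javascript
// Minimal in-memory stand-in for a Badger storage object (hypothetical;
// the real object persists to extension storage).
class MapStore {
  constructor() {
    this.items = {};
  }
  // Copy every key from `other` into this store, overwriting duplicates.
  merge(other) {
    Object.assign(this.items, other);
  }
  size() {
    return Object.keys(this.items).length;
  }
}

// Load seed data only on first run, so later startups keep learned data.
function loadSeedOnFirstRun(stores, seedJsonText, isFirstRun) {
  if (!isFirstRun) {
    return false;
  }
  const seed = JSON.parse(seedJsonText);
  stores.action_map.merge(seed.action_map || {});
  stores.snitch_map.merge(seed.snitch_map || {});
  return true;
}

// Example seed with made-up domains (assumed entry shapes).
const seedText = JSON.stringify({
  action_map: { "tracker.example": { dnt: false } },
  snitch_map: { "tracker.example": ["a.example", "b.example", "c.example"] },
});

const stores = { action_map: new MapStore(), snitch_map: new MapStore() };
const loaded = loadSeedOnFirstRun(stores, seedText, true);
const loadedAgain = loadSeedOnFirstRun(stores, seedText, false);
```

Gating on an explicit first-run flag keeps the seed from overwriting anything the user's Badger has learned since installation.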

|

This broke the assumptions behind a few of the selenium tests -- working on getting those fixed now. |

Add resetStoredSiteData function to BadgerPen prototype, which clears learned action_map and snitch_map. Update selenium tests to call this where necessary in order to pass.

|

Should we also resolve #971 by exposing a reset option somewhere in the UI (and then have tests click that button instead of running JS)? |

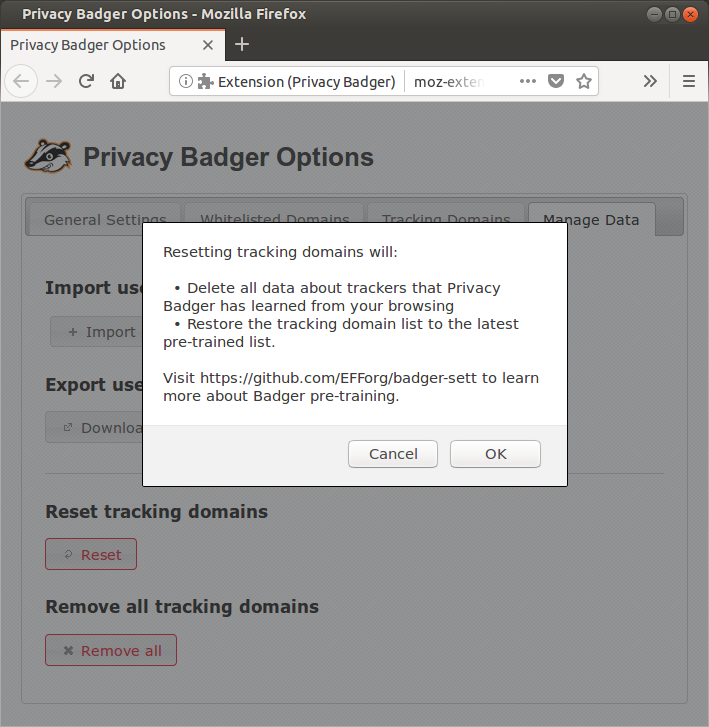

Add buttons which (1) reset the tracker lists to their default state and (2) clear the lists entirely, respectively, to the options page.
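The difference between the two buttons can be sketched as follows. This is only an illustration under assumed names: `createTrackerData`, `learn`, `resetToSeed`, and `clearAll` are hypothetical and do not correspond to functions in the extension.

```javascript
// Hypothetical holder for tracker data; all names are illustrative only.
function createTrackerData(seed) {
  let actionMap = {};
  let snitchMap = {};
  return {
    // Record that `domain` was seen as a third party on `firstParty`.
    learn(domain, firstParty) {
      actionMap[domain] = actionMap[domain] || { dnt: false };
      snitchMap[domain] = snitchMap[domain] || [];
      if (!snitchMap[domain].includes(firstParty)) {
        snitchMap[domain].push(firstParty);
      }
    },
    // "Reset": discard learned data and restore deep copies of the seed.
    resetToSeed() {
      actionMap = JSON.parse(JSON.stringify(seed.action_map));
      snitchMap = JSON.parse(JSON.stringify(seed.snitch_map));
    },
    // "Clear all": discard everything, including the pre-trained data.
    clearAll() {
      actionMap = {};
      snitchMap = {};
    },
    count() {
      return Object.keys(actionMap).length;
    },
  };
}

const seed = {
  action_map: { "seeded.example": { dnt: false } },
  snitch_map: { "seeded.example": ["a.example"] },
};
const data = createTrackerData(seed);
data.resetToSeed();
data.learn("learned.example", "b.example");
const afterLearning = data.count();
data.resetToSeed();
const afterReset = data.count();
data.clearAll();
const afterClear = data.count();
```

Deep-copying the seed on reset means later learning cannot mutate the bundled defaults.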

|

It seems easier to have the tests call the javascript directly. Clicking the reset button refreshes the page, which requires sleeping for a while to make sure other things don't break. I'll add a separate test in |

|

Where does the crawling script live? Shall we review the script together with this PR? How will we maintain the seed database to start with? Have the crawling script commit the latest database to its own repository daily, and add a Makefile task to update our definitions from that repository? We should document this step in our release process checklist. |

|

I've been playing around with training mine on a list from quantcast referenced in My thoughts are still to maintain it by wiping the whole thing every time or after a certain timeframe. Otherwise old domains will collect. I'd also do more than 1,000. If I loaded 1 page at a time, giving 5 seconds to each page, it could run through 10,000 in a bit over half a day. 1 million sites would take roughly 58 days, so I'm not suggesting that. I also disabled images, webgl, webassembly, downloadable fonts, rtc, serviceworkers, and a few other things to save on bandwidth and computer resources. After trying 100 or so of the top sites it still felt like I didn't see as many reds as I thought I'd get. Like maybe a few hundred. I also ran Firefox Lightbeam during it and that was pretty nasty. It would be interesting to run Lightbeam during the training, get a screenshot of that mess, and then run it after the training to see if PB makes a difference. And total download sizes too. |
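The crawl-time estimates in this comment can be checked with a few lines of arithmetic. The function name is made up for illustration, and the sketch assumes pages are visited strictly one at a time with a fixed dwell time per page.

```javascript
const SECONDS_PER_DAY = 24 * 60 * 60;

// Total crawl time in days for a sequential crawl with a fixed dwell time.
function crawlDurationDays(siteCount, secondsPerSite) {
  return (siteCount * secondsPerSite) / SECONDS_PER_DAY;
}

// 10,000 sites at 5 s each: 50,000 s, a little under 14 hours.
const hoursFor10k = crawlDurationDays(10000, 5) * 24;

// 1,000,000 sites at 5 s each: 5,000,000 s, between 57 and 58 days.
const daysFor1m = crawlDurationDays(1000000, 5);
```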

|

Current observed behaviour:

|

|

We should improve the message when the user clicks on "Reset browsing data" to explain that the data to be restored is the pre-built list. It's not exactly clear yet. |

|

The crawling script is here: https://github.com/EFForg/badger-sett

@jawz101 The script will run from scratch each time, so every (day|week|however often we run it) the seed data will be completely refreshed. Is this what you mean? This won't affect users after they download the extension unless they choose to click the "reset" button -- if you install PB now, then never reset your tracker list, you might have an out-of-date action map next year. But that's already the way things are. Also, nice idea with Lightbeam! I want to try that myself and see what happens. It would be really cool to have some visual representations of how much Privacy Badger is doing.

@andresbase This is not the expected behavior. After step 11, only the pre-trained data should be present. Thanks for the catch, I'll look into it. I'll tinker with the notification language as well. |

|

@bcyphers yeah that's what I mean. Here's an example of 25 sites I'd tested in the past comparing Firefox's Tracking Protection, Privacy Badger, and uBlock Origin in default and "medium mode." Kinda interesting to play with but it was alarming to get the visualization. As much as I love the idea of Privacy Badger, it leaves much to be desired. I'm hoping this sort of commit will prime the pump so PB can compete. With uBlock Origin in Medium Mode (blocking 3rd party scripts and frames by default) I get nearly complete separation. I've also drawn up something crude in the past which I thought might be neat. |

|

@jawz101 I think Lightbeam shows all third-parties without taking into account tracking. At least last time I checked I couldn't see tracking in some of the ones shown, but it's worth double checking. |

|

fwiw I trained mine last night on about 1300 sites before I stopped, just to see what I could see. I exported the Lightbeam export file as well. I didn't take a screenshot of the visualization, but imagine not seeing any of the black background in the Lightbeam screen. It was just the biggest mess in the world. (had to upload the json files as txt files for it to upload) lightbeamData.json.txt |

Add test_reset to options_test.py to test clicking on the 'reset data' and 'clear all' buttons in the options page.

6023c18 to b6a2f30

Add calls to `clear_seed_data()` at the start of the new test_tracking_user_overwrite_* Selenium tests.

src/data/seed.json

Outdated

| }, | ||

| "casalemedia.com": { | ||

| "dnt": false, | ||

| "h |


Nit: Could we get rid of trailing spaces?
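One possible way to address this nit is to re-serialize the file, since `JSON.stringify` with an indent argument does not emit trailing spaces. This is a minimal sketch; `normalizeJson` is a hypothetical helper, and the two-space indent is an assumption rather than the project's convention.

```javascript
// Re-serialize JSON text with a fixed indent; the output has no trailing
// whitespace on any line and ends with a single newline.
function normalizeJson(text) {
  return JSON.stringify(JSON.parse(text), null, 2) + "\n";
}

// Input with trailing spaces after several tokens.
const messy = '{ "casalemedia.com": {   \n  "dnt": false   \n} }  \n';
const clean = normalizeJson(messy);
```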

At E&D suggestion, add a red border to "dangerous" buttons and make the background red brighter.

|

@ghostwords Looks like everywhere we use |

|

Releasing this is blocked by #1972, I think. See #1947 (comment) and EFForg/badger-sett#17. |

|

Feedback from several users on this layout: split into bullet points: RESET

REMOVE ALL

|

chromedriver is fast enough to sometimes load the options page before Badger finishes fetching the pre-trained database from disk.

|

@andresbase @bcyphers How do these look? |

|

LGTM |

|

Good idea, let's use a redirect URL we control. |

|

Regarding #1972, another way it crops up is that Since |

Conflicts: tests/selenium/options_test.py Conflicts caused by 1f805af.

Don't fall back to boolean testing when a custom tester is present.

Need to reload the test page too when retrying since tabData doesn't get updated without a reload.
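The retry-with-reload idea from this commit can be sketched in isolation. The project's Selenium tests are written in Python; this JavaScript version is only an illustration, and `retryWithReload` and `fakePage` are hypothetical. A real test helper would normally also wait between attempts, which is omitted here.

```javascript
// Run `check` up to `maxTries` times, calling `reload` before every retry.
// Returns the attempt number that succeeded, or -1 if none did.
function retryWithReload(check, reload, maxTries) {
  for (let attempt = 1; attempt <= maxTries; attempt++) {
    if (attempt > 1) {
      reload(); // stale page state is not refreshed without a reload
    }
    if (check()) {
      return attempt;
    }
  }
  return -1;
}

// Simulated page whose data only becomes visible after two reloads.
let reloads = 0;
const fakePage = {
  reload() {
    reloads += 1;
  },
  hasTabData() {
    return reloads >= 2;
  },
};

const attemptsNeeded = retryWithReload(
  () => fakePage.hasTabData(),
  () => fakePage.reload(),
  5
);
```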

| utils.xhrRequest(constants.SEED_DATA_LOCAL_URL, function(err, response) { | ||

| if (!err) { | ||

| var seed = JSON.parse(response); | ||

| self.storage.getBadgerStorageObject("action_map").merge(seed.action_map); |


Should we use badger.mergeUserData here instead?


Maybe -- there will be the extra "version" key in the imported json, can the function handle that?


(yes, it can, and yes, we should.)
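To illustrate how a merge routine can tolerate an extra "version" key, here is a minimal sketch. It is not the implementation of `badger.mergeUserData`; `mergeKnownMaps`, `KNOWN_MAPS`, and the example data are hypothetical, and the overwrite-on-conflict behavior is an assumption.

```javascript
// Only these top-level keys are treated as mergeable maps (assumed list).
const KNOWN_MAPS = ["action_map", "snitch_map"];

// Merge known maps from `imported` into `target`; return any ignored keys.
function mergeKnownMaps(target, imported) {
  const ignored = [];
  for (const [key, value] of Object.entries(imported)) {
    if (KNOWN_MAPS.includes(key)) {
      // Imported entries win on conflict in this sketch.
      target[key] = Object.assign({}, target[key], value);
    } else {
      ignored.push(key);
    }
  }
  return ignored;
}

const target = {
  action_map: { "old.example": { dnt: true } },
  snitch_map: {},
};
const ignoredKeys = mergeKnownMaps(target, {
  version: "hypothetical-version-string",
  action_map: { "new.example": { dnt: false } },
  snitch_map: { "new.example": ["a.example"] },
});
```

Returning the ignored keys makes it easy for a caller to log unexpected fields instead of failing on them.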


Looks good! I'm going to merge so we can get started on updating translations. If we want to do anything about #1947 (comment), let's do a followup PR.

- Use pre-trained tracker data in new Privacy Badger installations.
- Add buttons to reset/clear tracker data.

Closes #1891, closes #971 and partially addresses #1019, #1299, #1374.

This PR includes a static JSON file with the `snitch_map` and `action_map` resulting from visiting the top 1,000 sites of the Majestic Million (in order). On first run, the background page populates the user's action_map and snitch_map with those pre-trained values.

It's not opt-in (or even opt-out, for now) because it seems to me that there is no downside to starting off with this training data. We might want to add a "clear data" or "reset Privacy Badger" option so that users or researchers can start with a totally blank slate if they choose, but 99% of users should never have to think about that.

The script for doing the crawling will go in a separate repo. We should probably set it up to run every week or so and set PB to pull down new versions every so often, but for now, we can just update the static file in this repository before new releases.

We probably also need to update parts of the FAQ and first-run flow to explain some of this, and the things explaining that "Privacy badger won't block things right away" can be removed. This PR is not meant to be merged in yet.

Please give feedback! Is this the right way to go about the training data? How should we update it? What about the UI should change, if anything?

Edit: The crawling script is here: https://github.com/EFForg/badger-sett