
Which computers will we use to crawl? #69


I just tested the crawler on my own Mac (using the newly merged main branch). The instructions are really easy to follow, and the local SQL database is easy to set up. I crawled the validation set Kate mentioned above. The crawler ran very well and didn't crash at all. While playing around with it, I found that the crawler was able to restart automatically even when I closed the window. Result:

I will update with the Mac mini results shortly.


I finished testing on the Mac mini as well. Looking at the terminal output, the crawler crashed several times but was robust enough to restart each time. Result:


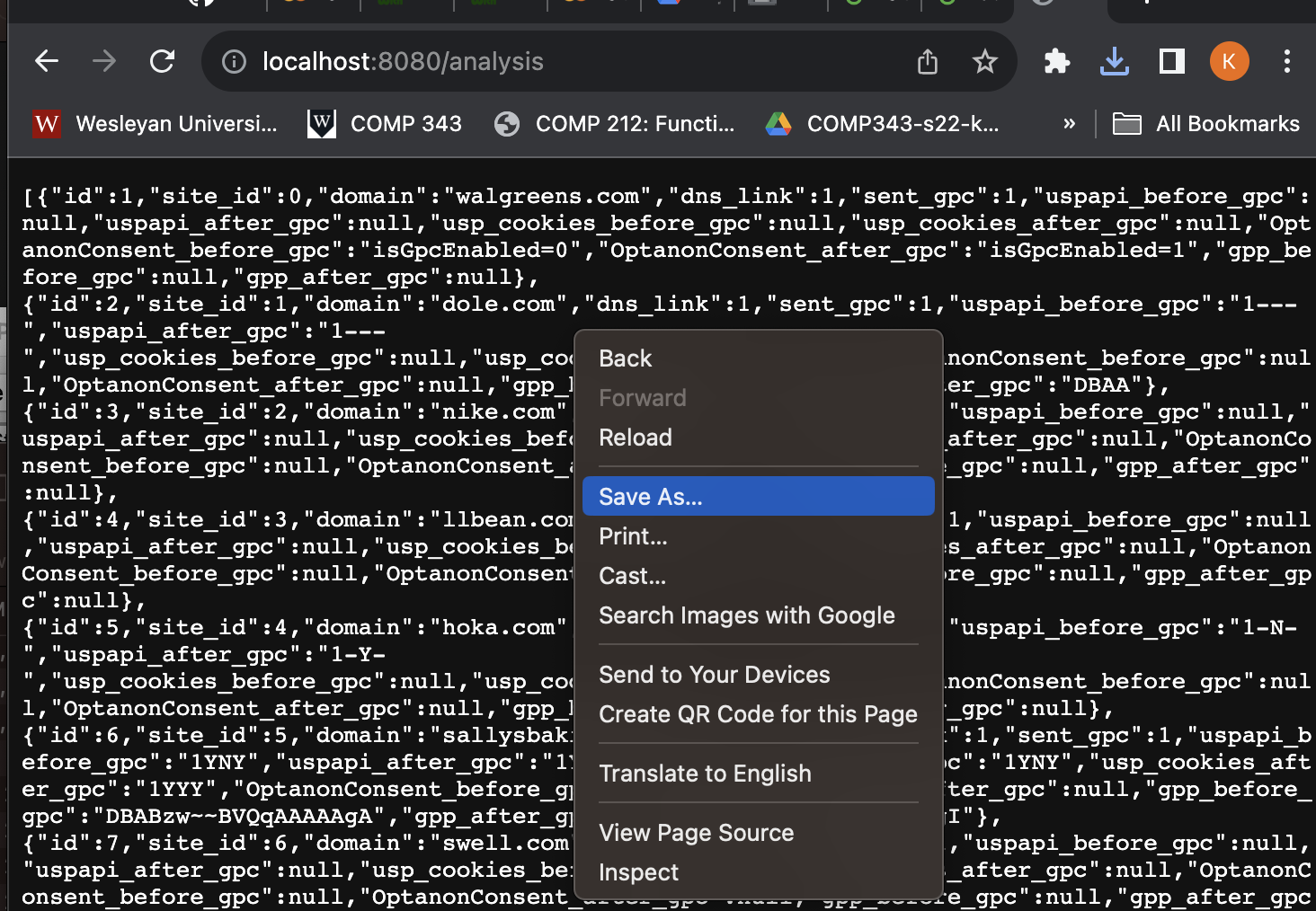

Could you save the database data from both crawls as JSON? One way to do this is to go to http://localhost:8080/analysis, right-click, select "Save As", and save the page as a JSON file. Then we can compare the crawl data to the ground truths. You can just put the files here.
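If the browser "Save As" step is inconvenient, the same export can probably be scripted. This is a minimal sketch, assuming the `/analysis` endpoint returns a plain JSON body (not confirmed in this thread); the function name `save_analysis` and the default output filename are hypothetical.

```python
import json
import urllib.request

# URL taken from the comment above; everything else here is illustrative.
ANALYSIS_URL = "http://localhost:8080/analysis"


def save_analysis(url: str = ANALYSIS_URL, out_path: str = "crawl_results.json"):
    """Fetch the analysis endpoint and write its JSON body to out_path.

    Assumption: the endpoint responds with JSON. If it serves HTML instead,
    json.loads will raise a ValueError.
    """
    with urllib.request.urlopen(url, timeout=30) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    # Pretty-print so the file is easy to diff against the ground truths.
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=2)
    return data
```

Run it once per machine while the local server is up, e.g. `save_analysis(out_path="mac_mini.json")` (filename hypothetical), and attach the resulting files here.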

@katehausladen This should be completed now :)


We will be using Sebastian's old computer.

Back in May in issue #37, we discussed which computers we would use for our crawl but never really came to a concrete conclusion:

Since crawling 10,000 sites will take multiple days, I think it would be best not to use our own computers. Now would be a good time to decide whether we should get new Mac minis or whether the current ones will work.

Some things to consider would be:

@Jocelyn0830, if you could do a small crawl on one of the Mac minis (assuming Professor Danner is not actively using both of them), that would be great. You can use this validation set (sites + ground truths). You can either run the crawl on your own Mac as well, or just compare the Mac mini results to the results I got on my Mac. My run used the VPN, took 1601 seconds, and had no errors logged in `error-logging.json`. This Google Colab notebook will help with the comparison.
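For a quick local check without the Colab notebook, a comparison along these lines might help. It is a rough sketch, not the notebook's actual logic: it assumes both the crawl export and the ground truths are JSON lists of per-site records sharing a key field, and the key name `site` and any field names you pass in are hypothetical placeholders for whatever the real schema uses.

```python
import json
from typing import Any, Dict, Iterable, List, Sequence, Tuple

Row = Dict[str, Any]


def load_rows(path: str) -> List[Row]:
    """Read a JSON file assumed to hold a list of per-site records."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def compare_to_ground_truth(
    crawl_rows: Iterable[Row],
    truth_rows: Iterable[Row],
    fields: Sequence[str],
    key: str = "site",  # hypothetical key name; adjust to the real schema
) -> Tuple[List[str], List[Tuple[str, str, Any, Any]]]:
    """Return (sites missing from the crawl, per-field mismatches).

    Each mismatch is a tuple (site, field, crawl_value, truth_value).
    """
    crawl_by_site = {row[key]: row for row in crawl_rows}
    missing: List[str] = []
    mismatches: List[Tuple[str, str, Any, Any]] = []
    for truth in truth_rows:
        site = truth[key]
        row = crawl_by_site.get(site)
        if row is None:
            # The site never appeared in the crawl output at all.
            missing.append(site)
            continue
        for field in fields:
            if row.get(field) != truth.get(field):
                mismatches.append((site, field, row.get(field), truth.get(field)))
    return missing, mismatches
```

Usage would look like `missing, diffs = compare_to_ground_truth(load_rows("mac_mini.json"), load_rows("ground_truth.json"), fields=[...])`, with the filenames and field list filled in from the actual exports. Both result lists should be empty for a clean run.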

If Oliver hasn't merged the issue-60 branch by the time you get to this, run the crawl from the issue-60 branch. The SQL database creation command is in the PR.
