unable to pull secrets or registry auth: pull command failed #290

Comments


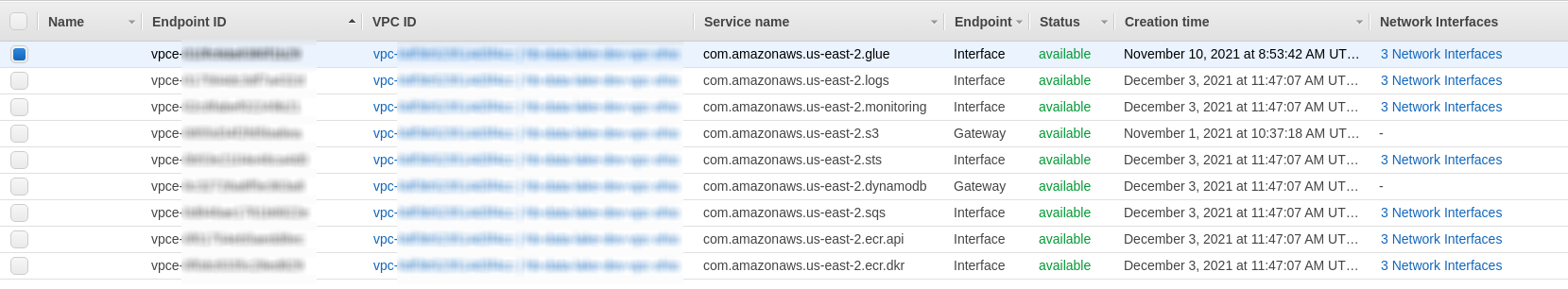

I found the issue. I deployed a second S3F2 stack in parallel, but with VPC, and compared the endpoints and security groups. It seems the SG must allow ingress on the HTTPS port (443) from the VPC CIDR range.
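For anyone hitting the same error, here is a rough sketch of how that finding could be checked offline. It assumes the ingress rules are given in the shape that the EC2 `DescribeSecurityGroups` response uses for `IpPermissions`. The function name and the `10.0.0.0/16` CIDR are made up for illustration. It only looks at IPv4 CIDR rules, so a self-referencing security group rule is not considered.

```python
import ipaddress


def allows_https_from_vpc(ip_permissions, vpc_cidr):
    """Return True if some ingress rule permits TCP 443 from the whole VPC CIDR.

    ip_permissions: list of dicts shaped like the IpPermissions entries of a
    DescribeSecurityGroups response (assumed input, not fetched here).
    vpc_cidr: IPv4 CIDR of the VPC, e.g. "10.0.0.0/16" (hypothetical value).
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    for rule in ip_permissions:
        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            # "-1" means all protocols and all ports.
            port_ok = True
        elif protocol == "tcp":
            port_ok = rule.get("FromPort", 0) <= 443 <= rule.get("ToPort", 65535)
        else:
            continue
        if not port_ok:
            continue
        for ip_range in rule.get("IpRanges", []):
            allowed = ipaddress.ip_network(ip_range["CidrIp"])
            # The rule covers the VPC only if the VPC range lies inside it.
            if vpc.subnet_of(allowed):
                return True
    return False


# Hypothetical rule: HTTPS ingress from the VPC CIDR.
https_rule = {
    "IpProtocol": "tcp",
    "FromPort": 443,
    "ToPort": 443,
    "IpRanges": [{"CidrIp": "10.0.0.0/16"}],
}
print(allows_https_from_vpc([https_rule], "10.0.0.0/16"))
```

If this returns `False` for the security group attached to the VPC endpoints, that would be consistent with the tasks being unable to reach the endpoints over HTTPS, though other causes are possible.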


Hi, thanks for opening an issue.


Hi,

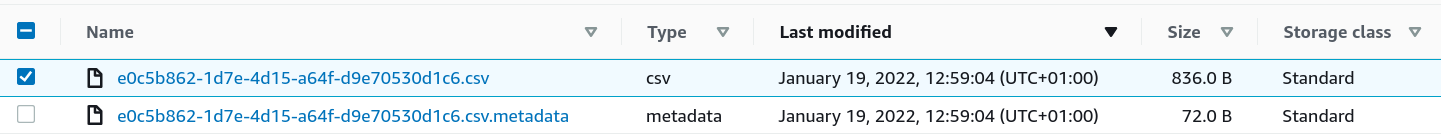

Encryption on that bucket does not use customer managed KMS keys, and I also disabled SSE-S3 just for troubleshooting. In the Athena dashboard I noticed that the Athena query executed successfully, and I think the files were saved to the bucket. The S3 endpoint was in place.

Hello,

I successfully deployed and tested this solution, and it worked. Version 0.42.

Now, with the same stack, the status has been "Running" for a whole day.

I found out that the ECS DelStack tasks are failing with this error

I have no idea how to troubleshoot.

I have all the VPC endpoints in place, attached to the private subnets, the same ones passed as a parameter to the CF stack.

The Security Group is also the same "default" one, attached to the ECS Service and to all of these endpoints.

Here are the parameters I'm using, almost all default.

This is Terraform code, but you can see the parameters

Also, a different, minor issue: I wanted to use my own AthenaWorkGroup, but I was not able to set the bucket permissions.

I tried with both roles, the Athena role and the other one deployed with CF.

And yes, one important thing.

I don't see how to stop the Deletion Job. It has been running for 24h and I can see this error, so it will certainly fail. It would be better to have an option to cancel the whole job.
