At Google I/O, its annual developer conference, Google revealed Project Gameface. The project is designed to let players control the computer’s cursor (and keyboard) with, you guessed it, nothing but their face.

In a video showing the technology in action, we hear from Lance Carr. Lance is a disabled gamer who lost their adaptive equipment, an expensive head-tracking mouse, in a house fire. This prompted Google to work together with Lance to design Project Gameface.

Project Gameface is a piece of software that recognizes head movements and facial gestures with a normal webcam. As such, it does not rely on often-expensive head-tracking equipment. The project is powered by Google MediaPipe, an open source set of tools and libraries for easily applying artificial intelligence (AI) to projects.

Actions and gestures

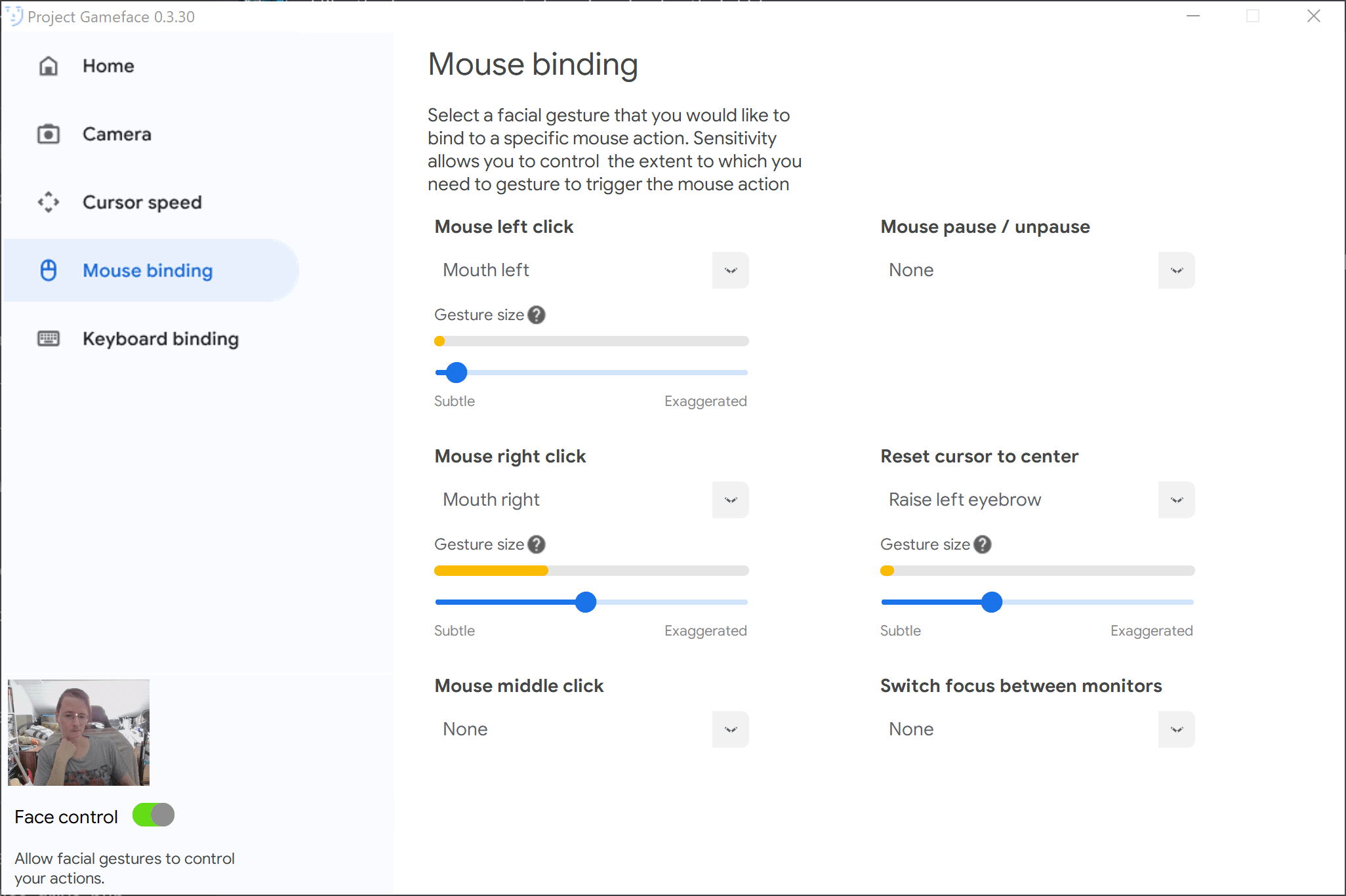

With the current release build of Project Gameface, only a few actions and gestures are available, but the source code implies a lot more is possible. You can set the following actions under mouse controls: left, right, and middle click; pause / unpause mouse control; reset cursor to center; and switch focus between monitors.

Aside from mouse control, it is also possible to assign keyboard actions to gestures. Ironically, the Escape key can’t be assigned, as pressing it cancels the key selection. The gestures available now are: open mouth, move mouth left / right, raise left / right eyebrow, and lower right eyebrow. The source code does define over 50 possible gestures, so I expect this list to grow.
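To make the idea of assigning actions to gestures concrete, here is a minimal Python sketch of a binding table. It is not taken from the Project Gameface source: the gesture names, action names, and the `make_binding` helper are all hypothetical and only mirror the lists above, including the Escape restriction.

```python
from dataclasses import dataclass

# Hypothetical action names, mirroring the mouse actions listed above.
MOUSE_ACTIONS = {
    "left_click", "right_click", "middle_click",
    "pause", "reset_cursor", "switch_monitor",
}


@dataclass(frozen=True)
class Binding:
    gesture: str  # e.g. "open_mouth" (hypothetical name)
    device: str   # "mouse" or "keyboard"
    action: str   # a mouse action or a key name


def make_binding(gesture: str, device: str, action: str) -> Binding:
    """Validate and create a gesture binding (illustrative only)."""
    if device not in ("mouse", "keyboard"):
        raise ValueError(f"unknown device: {device}")
    if device == "mouse" and action not in MOUSE_ACTIONS:
        raise ValueError(f"unknown mouse action: {action}")
    # Mirrors the behaviour described above: Escape cancels key
    # selection, so it can never end up as a bound key.
    if device == "keyboard" and action.lower() == "escape":
        raise ValueError("Escape cancels key selection and cannot be bound")
    return Binding(gesture, device, action)


bindings = [
    make_binding("mouth_left", "mouse", "left_click"),
    make_binding("open_mouth", "keyboard", "space"),
]
```

Keeping bindings as plain data like this would make it easy to add more gestures later, but how the actual application stores them is not something I have verified.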

Testing it out with a facial difference

Project Gameface is open source and available for download, so I decided to test out the current release. As a person with a facial difference, my experience with anything involving facial recognition is spotty at best, especially when it concerns tracking of movement. Project Gameface is no different, but it is possible to adjust the detection sensitivity of gestures.

This sensitivity adjustment turned out to be a necessary feature. It shows the detected gesture strength much like a microphone volume meter: a bar fills depending on how strongly the gesture is detected, turning from yellow to green once it passes the trigger threshold.

Assigning the left click to moving my mouth to the left required turning the setting all the way to “subtle”. Even then, I needed a quite exaggerated movement to trigger it. In contrast, it constantly detected mouth movement to the right, which I had to resolve by moving that setting toward “exaggerated”. Even so, it still occasionally registered clicks without any movement on my part. This tells me the models still need training with much more diverse faces, which is hardly a surprise for those who have encountered these issues before.
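The threshold behaviour described above can be sketched in a few lines of Python. This is my own illustration, not the Project Gameface implementation: it assumes each gesture yields a score between 0 and 1 per video frame, that a “subtle” setting corresponds to a low threshold and an “exaggerated” one to a high threshold, and that an action fires once when the score crosses the threshold. The names `meter_state` and `GestureTrigger` are hypothetical.

```python
def meter_state(score: float, threshold: float) -> tuple[float, str]:
    """Return the bar fill (0..1) and its colour for one frame."""
    fill = min(max(score, 0.0), 1.0)  # clamp the score to the bar's range
    color = "green" if fill >= threshold else "yellow"
    return fill, color


class GestureTrigger:
    """Fires once each time the score rises past the threshold."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.active = False

    def update(self, score: float) -> bool:
        was_active = self.active
        self.active = score >= self.threshold
        # Only report the moment of crossing, not every frame above it.
        return self.active and not was_active


# Assumed per-frame scores for a single gesture, e.g. "mouth left".
scores = [0.1, 0.5, 0.7, 0.8, 0.2, 0.9]
trigger = GestureTrigger(threshold=0.6)
fired = [trigger.update(s) for s in scores]
print(fired)  # [False, False, True, False, False, True]
```

With a model like this, a face that naturally produces a high resting score for one gesture would need a higher threshold for it, and a gesture the model barely registers would need a lower one, which matches what I had to do by hand.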

Even in its current state, Project Gameface can already benefit many people. Because it works with any webcam, it offers an affordable solution for accessible control, provided the user has good control over their head and facial movements.