Ars Technica Videos


The Lightning Onset of AI—What Suddenly Changed? | Ars Frontiers

Our panel discussion 'The Lightning Onset of AI—What Suddenly Changed?' from the Ars Frontiers 2023 Livestream

Released on 5/23/2023

Transcript

00:00

[upbeat music]

00:09

Oh, hi. I didn't see you there.

00:11

Welcome to my office.

00:12

I'm Benj Edwards, a large language model,

00:15

otherwise known as Ars Technica's AI

00:17

and machine learning reporter.

00:19

And you're watching the lightning onset of AI.

00:21

What has suddenly changed?

00:23

While AI has been around since the 1950s,

00:26

recent advancements in generative AI systems

00:28

have enabled impressive creative feats,

00:30

such as writing, chatting, and creating images.

00:33

We've quickly gone from a world

00:34

where very few have heard of things like ChatGPT,

00:38

'cause it only came out six months ago,

00:40

to a time where the entire tech industry

00:44

is racing to integrate them into their products.

00:46

So, what we're gonna do today

00:48

is explore what exactly has happened in the past few years

00:51

to enable this apparent leap in technology.

00:55

And to help us answer those questions,

00:56

we've invited two very distinguished guests to our panel.

00:59

But before I introduce them,

01:00

I wanna give a quick reminder to send any questions

01:03

for them to the comments on YouTube.

01:06

Okay. So, onto the panelists.

01:09

First, Paige Bailey is the lead product manager

01:11

for generative models at Google DeepMind.

01:15

She's in charge of the PaLM 2 model

01:17

and the new Gemini large language model under training.

01:20

Previously, she spent over a year at Microsoft

01:23

as director of machine learning and MLOps for GitHub,

01:25

working on GitHub Codespaces and Copilot.

01:28

Welcome, Paige.

01:31

Thank you.

01:32

I'm really excited to be here

01:33

and can't wait to hear the audience's questions

01:35

and to learn more about what folks

01:37

are excited about for generative AI.

01:40

That's awesome.

01:41

And next, Haiyan Zhang,

01:43

the general manager of Gaming AI at Xbox.

01:47

She has over 20 years of experience in software engineering,

01:49

hardware R&D, and service design.

01:52

She's also the former host

01:53

of the BBC TV tech series, Big Life Fix.

01:56

Welcome, Haiyan.

01:57

Hey, Benj. Great to be here.

01:59

Great to be chatting with you and Paige as well.

02:01

Awesome.

02:03

It's great to have both of you here.

02:04

Now, I'll just get to the questions.

02:07

Since we're gonna be discussing AI and it's a nebulous term,

02:11

I wanna take just a minute,

02:12

maybe in a sentence, could you tell me what AI means to you?

02:17

Like what's the definition of AI?

02:18

Paige, do you wanna do it first?

02:21

Sure, I'd be happy to.

02:22

So, in general,

02:24

I know that there's been a lot of hype

02:26

around like what is AI,

02:28

how is AI different than machine learning,

02:31

is it an evolution of machine learning?

02:33

And really, I like to think of AI

02:35

as helping derive patterns from data

02:39

and use it to predict insights.

02:42

So, you can think of AI as just a way

02:46

to take the wealth of knowledge,

02:49

the wealth of data that's been collected over time,

02:52

and to be able to do really interesting things

02:55

like we've seen with models like PaLM 2

02:58

and with GPT-4, to create text,

03:04

to generate code samples, to explain code,

03:07

all those sorts of things.

03:09

So, really, it's not anything more

03:14

than just deriving insights from data

03:16

and using it to make predictions

03:18

and to make even more useful information.

03:23

Haiyan, what do you think

03:24

about AI? Oh, wow.

03:25

[Benj laughing] Yeah.

03:26

Building on Paige's description, I'd say,

03:28

so I focus on video games

03:29

and we think of video games

03:31

as the ultimate expression of human creativity.

03:34

It's an art form for people to experience.

03:36

And we see the transformation of AI

03:39

from using these algorithms, these models to analyze data,

03:45

to look for patterns in data, to classify data,

03:48

now evolving to having creative capabilities

03:52

both in language and image and code.

03:56

And we see these creative generative capabilities

03:59

having ultimately the really amazing tools

04:03

to bring out human creativity.

04:06

Yeah, that's awesome.

04:07

So, let's get to the title of the panel: what has changed?

04:12

So, what has changed that's led to this new era of AI?

04:15

Is it all just hype

04:16

or just based on the visibility of ChatGPT?

04:19

Or have there been some major tech breakthroughs

04:21

that gave us this new wave?

04:23

Maybe, Haiyan, you could start.

04:27

Yeah, I mean for us in Xbox, in Microsoft gaming,

04:31

we've been shipping AI for over a decade, initially with

04:37

Bayesian inference algorithms

04:39

for things like matchmaking and skill ranking for players.

04:43

And now, in the last few years,

04:44

we've seen breakthroughs in the model architecture

04:48

for transformer models,

04:49

as well as the recursive autoencoder models

04:53

and also the availability of large sets of data

04:57

to then train these models

04:59

and, thirdly, couple that with

05:01

the availability of hardware such as GPUs, NPUs,

05:06

to be able to really take the models, to take the data,

05:11

and to be able to train them with new compute capabilities.

05:16

Yeah, wow.

05:16

Paige, what do you think?

05:19

Absolutely.

05:19

I think at Google,

05:20

we've been thinking about AI for a long time.

05:23

We've been a machine learning first company since inception.

05:26

And so, many of the great kind of capabilities

05:29

and breakthroughs that we've seen

05:30

in the generative model space,

05:32

the transformer architecture,

05:34

things like instruction tuning and RLHF,

05:37

reinforcement learning from human feedback,

05:39

were actually pioneered many years ago.

05:42

So, I think DeepMind's first RLHF paper

05:45

and blog post was around 2017.

05:47

But really now, we've come to this place

05:50

where we have really compelling hardware like TPUs.

05:54

We have massive amounts of data

05:57

that we can use to train and to learn from.

05:59

And then, we also have this vibrant community,

06:03

I think now of open source tinkerers

06:06

that are open sourcing models, models like LLaMA,

06:11

fine-tuning them with very high quality instruction tuning

06:15

and RLHF data sets.

06:17

And really, most compelling to me

06:19

is they're able to eke out the same performance

06:22

that you might see from a much, much larger model

06:25

just by virtue of having a smaller model

06:27

fine-tuned on a very high quality dataset.

06:31

So, I think, this is what I've been waiting my entire career

06:36

to see honestly is we have

06:39

this kind of exponential increase

06:40

in the capabilities of models,

06:42

but even more importantly, making them efficient,

06:45

making them small and concise,

06:47

and helping unlock their capabilities

06:50

for not just the people

06:51

who can afford the most expensive GPUs,

06:54

but also anyone who's interested

06:57

in having these productivity and creativity helper tools.

07:01

Yeah, that's amazing.

07:02

As you said that, I was gonna ask you about

07:05

if you think that the collaborations and open source,

07:08

open source machine learning platforms

07:11

and even sharing code and research,

07:14

has that been really important in accelerating things in AI?

07:18

Well, I think it certainly has in the sense

07:21

that many of the frameworks that we use

07:25

for training open source models

07:27

like PyTorch, JAX, and TensorFlow,

07:31

they're all open source,

07:33

people sharing models and making them available.

07:39

Things like Hugging Face,

07:41

these things would've never been possible

07:43

without the great help

07:45

and the contributions of the open source community.

07:48

And really sharing best practices as well

07:51

has inspired teams to take new research directions,

07:55

which can unlock many of these capabilities.

07:58

So, I certainly do think

08:00

that this machine learning community is only in existence,

08:05

because people are sharing their ideas,

08:08

their insights, and their code.

08:10

And I hope that's something that will continue

08:13

as we move more into the world of generative models.

08:16

Yeah, that's amazing.

08:18

So, does Google have any plans

08:21

to do any kind of open source models

08:23

that you could discuss?

08:26

So, I do know that we've open sourced many of our models

08:29

and if you go to github.com/google-research/google-research,

08:35

you can see many of the papers

08:38

and sort of models and code that we've released.

08:41

I also encourage you to check out Hugging Face.

08:44

We partner quite closely with them.

08:46

And so, as often as possible,

08:48

whenever we release a paper at an academic conference,

08:50

we try to have some kind of demo publicly available

08:54

either through a website

08:55

or through sharing our insights on Hugging Face.

08:59

But I also,

09:02

I don't want to give away anything

09:05

that might be coming down the pipe.

09:07

So- Yeah.

09:09

Make sure to check out Google Research on GitHub.

09:12

That makes sense.

09:13

Now, Haiyan, from your point of view,

09:19

have the advancements in hardware improved

09:22

and contributed to these AI breakthroughs?

09:26

She mentioned TPUs,

09:27

which I think is mostly a Google kind of thing,

09:29

but there's a lot of work on the GPU side of things.

09:32

So, what do you think about that hardware's role?

09:36

I think Paige makes such a great point

09:39

in that when people play video games,

09:44

it is either through a console in their house

09:48

or through their PC or through cloud streaming.

09:52

And in all of these experiences,

09:54

we want these to be consistent, to be best in class,

09:57

both in terms of the rendered quality of the games,

10:00

and the AI that powers the games.

10:03

And so, we need to be looking at, hey,

10:05

how do we make sure that the AI in the future

10:08

as it runs in these games, performs consistently

10:11

across lots of different kinds of hardware?

10:15

Mm. Yeah.

10:16

How long do you think until we can stick a generative AI model

10:18

in a game console?

10:20

Like [mumbles]

10:21

I'm very excited about that.

10:22

But I do think it will be a combination of working

10:27

for the AI to be inferencing in the cloud

10:30

and working in collaboration with local inference

10:33

for us to bring to life the best player experiences.

10:37

Wow. Right.

10:38

I also encourage you to that point there,

10:42

as a result of the LLaMA models being open sourced,

10:46

there were some people in the ML community

10:49

that were able to bring it down

10:51

to 1 billion parameters in size

10:54

and actually put it on a mobile device.

10:56

So, it's really just mind-boggling

11:00

to think of how cool it is

11:02

that you can have your own personal large language model

11:05

on your mobile device such that you can ask it questions.

11:09

The data stays on device,

11:10

so there's no need to ping an API.

11:14

And they're getting better and better each day.

11:18

I would love to have a hyper personalized

11:21

large language model running on a mobile device

11:23

or running on my own game console that can

11:29

perhaps make a boss

11:30

that is particularly gnarly for me to beat,

11:35

but that might be easier for somebody else to beat.

11:38

Oh, Paige- Right.

11:39

Oh, sorry.

11:41

Yeah, go on, Benj.

11:42

I was just gonna say, Paige,

11:43

if it runs locally on your device, does that cut Google out?

11:47

Where does that leave room for PaLM like in the cloud,

11:51

if everybody's running

11:52

their own open source model on a phone?

11:54

So, that's a great question.

11:56

I do think that there are probably,

11:59

there's probably space for a variety of options.

12:02

Like of course, there are great APIs

12:06

from many different companies.

12:07

So, from Google, from OpenAI,

12:11

from Hugging Face and Cohere,

12:14

and several others also have paid service API options.

12:18

But it might be that some companies

12:20

want additional data privacy

12:22

or they want to be able to have custom fine-tuned models.

12:26

And I think there should be options available

12:29

for all of these things to coexist meaningfully.

12:34

And as we've seen, I think generative models

12:38

have captured the attention

12:39

and the hearts of most people that use them.

12:43

And I don't think there's going to be any shortage

12:46

of use cases for generative models long-term.

12:50

So, making sure that there are a spectrum of ways

12:53

that people can leverage their capabilities

12:58

is going to be quite important.

13:01

Haiyan, did you have any thoughts about that?

13:03

Well, I was gonna say,

13:05

I think I'm super excited by all of the new AI technologies,

13:13

new generative models that are being developed.

13:16

I think some of the work that I do is really focused on,

13:19

hey, we've got all this crazy amazing new tech,

13:22

how do we turn them into tools

13:24

for game creators to bring their imagination to life?

13:29

And I think that translation layer between

13:33

the raw technology and the raw APIs, and the tools for people

13:37

who are not necessarily wanting to drill into the APIs

13:41

and the tools themselves,

13:42

but are more like, hey, I wanna bring this vision,

13:45

this artistic vision of mine to life,

13:46

how do we make the tools available

13:48

to everybody to be able to do that?

13:50

And that translation, that layer needs a lot of work.

13:53

So, we see a lot of crazy demos,

13:57

really great samples out there.

14:00

When we look at them,

14:01

I think some of the work that the generative AI models

14:06

are doing can work in one instance and not another instance.

14:10

We call that kind of, it's a brittle demo.

14:13

It's like a great demo for a one-off,

14:15

but if you are gonna roll out a game

14:17

to 100 million, 200 million players,

14:19

we need that AI to work every single time on every endpoint

14:24

that the player is accessing that experience on.

14:27

And so, I feel like that is kind of the work

14:29

that I'm really passionate about.

14:31

Yeah, that's amazing.

14:33

So, since we're talking about the lightning onset of AI,

14:38

I'd be remiss if I didn't talk

14:39

about some of the risks maybe.

14:41

I know you're both socially conscious people.

14:43

How do you feel about any social risks

14:45

from AI systems like misinformation

14:48

or making factual mistakes

14:49

or deep fakes or anything like that?

14:52

Is that something that's on your mind?

14:54

So,

14:57

at Google, we care very deeply

15:00

about making sure that the models

15:01

that we produce are responsible

15:03

and that they behave as ethically as possible.

15:06

And we actually incorporate our responsible AI team

15:09

from day zero whenever we train models

15:12

from curating our data,

15:15

making sure that the right pre-training mix is created,

15:18

to also making sure that we're asking the right questions

15:22

through the model development process

15:23

and also through deployments and fine-tuning.

15:26

So, I do think that

15:30

there is significant risk for

15:35

models to be misused

15:37

in the hands of people that might not necessarily understand

15:43

or be mindful of the risk.

15:45

And that's also part of the reason why sometimes,

15:48

it helps to prefer APIs

15:53

as opposed to open source models.

15:57

As I mentioned before,

16:01

the APIs that we produce from Google

16:04

go through very, very rigorous responsible AI filtering

16:08

from T equals zero.

16:11

If you are adopting an open source model,

16:16

you and your team have to be mindful

16:18

of all of those constraints and risks yourself.

16:21

And to make sure that you're asking the questions

16:24

through the deployment process

16:25

to make sure that the models are used responsibly.

16:29

I do think though that there are great tools

16:32

and lots of wonderful research being done

16:34

to help us understand how to better use

16:36

and responsibly use models.

16:39

And that this field of work

16:42

is only going to increase in importance over time.

16:46

So, that is something

16:48

that I am really honestly very delighted

16:52

and heartened that Google cares deeply about,

16:56

and something that we all have to be mindful about

17:00

as machine learning practitioners.

17:02

And, Haiyan, I know Microsoft also does a lot of work

17:05

in the AI ethics and responsible AI space,

17:08

Kate Crawford and her team.

17:10

So, I mean, Paige, I love that we're having this conversation.

17:15

To me, I think there's a few different facets

17:16

of responsible AI and our work

17:20

to make sure everybody's included in that conversation.

17:23

So, firstly, I think generative AI

17:26

has this incredible potential to make our existing games

17:30

and features more inclusive and more accessible.

17:33

So, things like, hey,

17:34

how can we make games adaptable to your skill level

17:37

or your particular set of capabilities?

17:39

So, these are some of the areas we're looking at,

17:41

hey, how can we make games way more accessible

17:44

to everybody no matter what kind of controller they're on,

17:47

no matter what kind of abilities they have.

17:49

The second piece is,

17:51

when we think about incorporating AI into games

17:54

or into our gaming platform, how do we make sure that AI,

17:58

those new AI products and features are inclusive?

18:01

And that means working with communities.

18:03

So, we have great programs inside of Xbox,

18:07

like our Xbox Ambassadors

18:09

that we reach out and we talk about, hey,

18:11

how do we make sure that this is inclusive

18:13

of all different communities,

18:15

folks from different cultural backgrounds.

18:18

And then, I think the third piece, you are right,

18:20

is responsible AI at its core is something

18:23

that we care very deeply about as Microsoft

18:26

and also as Xbox,

18:27

some of the conversations we have between gaming

18:30

and the core responsible AI team

18:32

is that I think our needs for responsibility

18:36

are going to be different,

18:37

because for each industry,

18:38

you are going to have different keywords,

18:40

different kinds of filters, different scenarios.

18:42

Imagine the game,

18:43

all the morally ambiguous choices you are making

18:47

and potentially using AI as your support system.

18:50

And how do we make sure that the AI features

18:54

we roll out in video games still have that same,

18:58

those AI tools available

19:00

to make sure that they are transparent, inclusive, safe,

19:04

while at the same time, understanding that those scenarios

19:06

are, I'm taking over an alien planet,

19:09

[Paige and Benj laughing] I'm taking my spacecraft

19:11

to Mars and I'm gonna terraform.

19:13

How does the AI really understand these new moral scenarios

19:17

that we can experiment within games?

19:20

Yeah. That's awesome.

19:21

Yeah, that makes sense.

19:22

Let me, unfortunately, we don't have a lot of time,

19:24

so I have to transition to the audience questions now.

19:27

Those are great answers.

19:29

I see two really big questions right off hand.

19:33

This is like, [sighs] it's on everybody's mind.

19:36

So, Tim asks, "How do we put fears of AGI to rest?"

19:41

And, Paige, do you wanna go first?

19:45

So, I think that's a really interesting question

19:51

and AGI is

19:54

something that if you ask five different people

19:58

what their definition of AGI might be,

20:01

you'll probably get five different answers.

20:03

If you view AGI as creating a model

20:08

that is generally useful at a broad variety of tasks,

20:11

so capable of doing many things,

20:13

whether it's generating text,

20:17

generating code, understanding images,

20:20

generating videos,

20:22

I think that we can see

20:26

how that might be a productivity enhancement,

20:29

a creativity enhancement.

20:32

But again, that's only if we start incorporating

20:37

these responsible AI features and roadblocks

20:40

and ensure that we use models responsibly.

20:44

I think, from my perspective, AGI is still something

20:52

that's ill-defined

20:57

and if we're just talking about

21:00

creating a generally useful model,

21:02

we're close to being there.

21:05

But AGI as it's viewed in science fiction scenarios,

21:11

I think

21:14

is still

21:16

a long way off if ever

21:18

and

21:21

is something that we should be mindful about as an industry

21:24

and start building processes.

21:27

I know also there's been a lot of discussion,

21:31

especially recently around things like AI regulation

21:35

and also making sure that companies

21:38

who are building these AI systems are behaving responsibly,

21:43

especially as they're building the largest models.

21:46

And hopefully that's something that will be used

21:50

as a way to build muscle in this space soon.

21:54

Though, of course,

22:00

that's outside my field of expertise to enable.

22:06

But I do think that as an industry,

22:10

we need to get a little bit more crisp

22:12

about what is the definition that we have of AGI,

22:15

and then also encourage lawmakers and policymakers

22:22

to adopt new standards

22:24

and things that might help

22:26

ensure that we're building AI systems responsibly.

22:30

Mm. Haiyan, what about AGI, superintelligence?

22:34

I think most people are afraid of losing their jobs

22:36

and some people are afraid

22:38

of it taking over the earth and destroying civilization.

22:41

What do you think, Haiyan, is that in the cards?

22:45

Well, I hope not, Benj. [Paige laughing]

22:47

Firstly, I wholeheartedly agree with Paige

22:50

and I encourage us to have this conversation

22:53

out in the public sphere

22:55

about the impact and future ramifications,

23:01

future implications of AI on our society.

23:05

What do we want our society to be?

23:08

What do we wanna focus our talents and efforts on?

23:11

And how can we bring in AI

23:13

to support us in the things we wanna do as humans,

23:17

as people working together in a community?

23:19

And I love the direction we're going

23:22

with talking about AI regulation,

23:25

what kind of rules

23:27

and safety balances do we wanna put in place?

23:33

And I think interestingly, you mentioned,

23:37

for many decades,

23:38

the earliest interaction that people have had with AI

23:42

has been through game playing.

23:45

The first AI algorithms were really chess computer programs.

23:51

We've got Garry Kasparov being defeated by Deep Blue.

23:56

We've got AlphaGo playing against Lee Sedol.

24:01

And even though these were simple algorithms, simple models,

24:05

not so simple when it comes to the research,

24:07

but they did one thing really well.

24:10

But you can see when people play,

24:12

when they play in these experiences,

24:14

they can't help but project personality.

24:17

When you play Pac-Man, those ghosts are chasing you,

24:20

you are cursing those ghosts as if they were alive.

24:23

You are projecting humanness, personality

24:27

onto these artificial beings,

24:29

which are just rules-based algorithms.

24:32

So, I think in a way, we can't help but project that,

24:36

hey, I see this AI tool doing these amazing things

24:40

and thinking that it has more general intelligence

24:45

and liveliness than I think, under the hood, it really has.

24:51

So, I do think we have a long way to go to AGI.

24:54

I think we are starting those conversations now.

24:58

And also, I love that Paige talked about science fiction.

25:02

How can we explore these scenarios

25:04

in science fiction, in video games?

25:06

How can we explore AI in video games

25:09

to help better inform how we feel about that as a society?

25:13

Yeah. And that was awesome.

25:16

And I also want to just ease people's thoughts a little bit

25:23

in terms of having generative models take away jobs.

25:27

If anything, I think it's going to be creating more jobs

25:30

and empowering us all to be more productive

25:33

and creative at our current workplaces.

25:36

There's a lot that I do every day

25:38

that's a little bit mundane.

25:40

It feels repetitive.

25:41

Having something that could take ownership of that,

25:45

like having a little grad student

25:47

as part of my own tiny research lab would be amazing.

25:50

And then, also finding ways to unlock new careers.

25:56

Flights, whenever planes were first created,

26:00

they created jobs for pilots,

26:02

for flight attendants, for people to work at airports.

26:06

It is clear that generative models

26:08

are going to be more of the same.

26:10

So, instead of taking away jobs,

26:14

it will just make us all more delighted

26:17

at the places that we currently work,

26:19

and then also unlock the potential for many new roles.

26:23

That's awesome. Yeah, that makes sense to me.

26:26

One more question, this is a prickly one

26:28

and we only have about two minutes left.

26:29

So, how do you feel...

26:32

Let's see who put this question.

26:35

NotNoel said,

26:36

Are you concerned about AI models

26:39

that are trained using public data without consent?

26:42

Like from creators,

26:44

like scraping stuff from the internet

26:45

that's feeding these big models.

26:47

How do you feel about 'em? Is it ethical?

26:50

Is it something you're working to change?

26:54

Try to answer quickly. [laughs]

26:58

So, I can give an example of something

27:01

that we did recently at Google as part of our Bard project.

27:07

We introduced the concept

27:09

of recitation checking within the tool.

27:12

So, first off, the models that we train

27:15

are on publicly available data, publicly available code.

27:19

So, nothing outside of the realm of anything

27:24

that you would see if you went on google.com

27:26

and did a search.

27:28

And then, for the

27:31

sort of questions that folks ask on Bard,

27:34

if there's any code that they generate

27:38

a portion of which might be in a GitHub repo

27:43

or in another place where public source code

27:46

is stored on the internet,

27:48

it will actually have a URL back to the source,

27:52

such that it gives attribution back to the author,

27:55

and then also calls out what license was used

27:58

in addition to that source code.

28:00

So, you can see if it's Apache 2.0

28:02

or if it's something less permissive like GPL.

28:05

But more importantly,

28:07

it's lending this idea to author accreditation.

28:12

And one thing that made me particularly delighted

28:14

was being able to see new projects

28:19

that I might not have discovered otherwise

28:20

when I was asking the model to accomplish a task.

28:25

It can point me to a function

28:26

that's already been implemented

28:27

as opposed to me trying to hash it out myself.

28:31

So, I do think there are ways

28:33

to introduce credit attribution,

28:36

and then also to be mindful

28:39

and to only include data that the authors

28:41

have explicitly listed

28:42

under a permissive license for your pre-training mix.

28:46

Yeah.

28:47

Haiyan, we're out of time,

28:48

but I want you to have a chance to answer that real quick,

28:51

'cause it's only fair.

28:52

Oh, thanks, Benj.

28:53

Well, you can see Yeah.

28:54

with the Bing Chat product,

28:57

the idea of attribution and really making sure

29:01

that all the information being surfaced in that chat

29:04

is grounded in actual sources across the internet

29:07

is baked into the product from day one.

29:09

So, if you go to Bing Chat,

29:10

you ask it a question, it summarizes answers for you,

29:13

it gives you answers,

29:14

but it's always attributing back to the pages,

29:17

the sources that it came from.

29:18

And I think that is core

29:20

to how we think about product development

29:22

with generative AI,

29:23

with these variations of GPT models

29:26

that we do think about that inclusion

29:29

and making sure every creator,

29:30

every contributor is supported

29:34

and feels that their content is being respected.

29:39

Yeah. Thank you.

29:40

Well, that's all the time we have for today.

29:42

Thank you so much, Paige and Haiyan,

29:44

for taking the time to be here.

29:45

It's been awesome. Now, back to Ken and Lee.

29:51

That was fantastic. Thanks, Benj.

29:54

He'll be joining us shortly. Yeah.

29:55

That last question is actually one that is super important

29:59

Mm. and I think

30:01

as much as attribution is important,

30:03

I still worry about who's gonna be left

30:07

with the incentive to create the things that get attributed.

30:09

So, Benj.

30:11

Hey. Oh, I'm surprised

30:12

to see you there

30:13

over my left shoulder. [Lee laughing]

30:15

Lemme get in the center of this big old TV.

30:17

There you go. [Ken laughing]

30:18

Yeah.

30:19

So, listen-

30:20

I'll be in here.

30:21

Thanks. This was great.

30:22

But

30:24

there's a danger I think in anthropomorphizing AI.

30:29

You guys talked about AGI, which to me,

30:32

will always be like the Sierra game engine from the '80s,

30:34

but I know it's...

30:35

What does AGI stand for these days?

30:37

The AGI that we're afraid of.

30:38

Artificial general intelligence.

30:40

There you go. Right.

30:42

And there is a danger to treat AI as if it were human

30:46

and has human-type perceptions.

30:48

And I think that's probably one of the,

30:49

something that's baked into us as people.

30:51

Because when Ken and I are speaking,

30:53

we're keeping eye contact, I'm looking at you,

30:55

we're helping each other along with the conversation.

30:57

AIs don't approach conversation in the same way.

31:00

AIs don't really do conversation really.

31:03

This is uncharted weird territory.

31:07

Yeah, I think we have an adjustment period

31:09

where we're confronted with an entirely new type of thing

31:14

and we have to build the metaphors to understand it

31:18

and the structure and the knowledge.

31:20

And I'm sure when some other new technology came out like,

31:25

I don't know, a typewriter or something,

31:27

people probably looked at it and said,

31:28

what do you do with this thing?

31:29

What does it mean for everything?

31:31

And that period may have taken a few decades,

31:34

I don't know. That was in the 1800s.

31:36

But I think as far as AI is concerned,

31:39

we're just at the very beginning of generative AI.

31:43

And we may get so bored with it that,

31:46

and it becomes so mundane that we don't think,

31:49

oh, this is magic people talking to us from a computer.

31:52

It's a tool, a creativity tool

31:55

and a text and information processing machine.

31:57

So, who knows.

32:00

In a lot of ways, it feels like this is fulfilling,

32:02

at least at a surface level,

32:03

the promise that a lot of tech companies have been making

32:06

for decades and decades about how like one day,

32:08

you'll have an AI assistant in your pocket

32:10

and it will be able to do this and that and that.

32:12

In a lot of ways,

32:13

we got that with the assistants

32:14

in our smartphones and stuff.

32:15

But this is beyond that.

32:17

This is maybe a little closer to doing that, would you say?

32:20

Yeah.

32:23

It's been foretold in sci-fi for so long

32:26

that we'll have some kind of intelligent agents

32:28

or intelligent assistants.

32:31

And so, when we see this coming,

32:33

something that even looks a little bit like it,

32:35

it just rings a lot of alarm bells for people.

32:38

'Cause we live by popular culture.

32:40

It cements us all together.

32:42

So, it's like everybody says, is sci-fi becoming real?

32:47

In a way, yes,

32:48

but the actual dimensions of what's actually gonna happen,

32:54

no one can fully predict that at the moment.

32:56

We have good guesses,

32:58

but we just have to adjust as a society,

33:00

I think, to these new technologies.

33:03

Yeah.

33:03

What is your dream application for generative AI?

33:08

Oh, man, that's a good one actually.

33:10

I have all these cassette tapes.

33:13

I used to be a musician

33:14

around a music website a long time ago, 20 years ago.

33:18

And I used to record all these song ideas on tapes.

33:22

And boy, would I love

33:23

to run those cassette tape things through

33:26

and make it produce a fully produced song

33:30

with my voice and everything.

33:31

I'd love to hear that music fully realized

33:34

and basically have my own artificial band to make it happen,

33:38

because I would never have the time

33:39

to do that myself anymore.

33:41

It's possible. Yeah.

33:42

So, man, that would be cool.

33:43

That's a great-

33:44

Thank you, Benj.

33:45

Yeah, thanks.

33:46

Thanks.

33:47

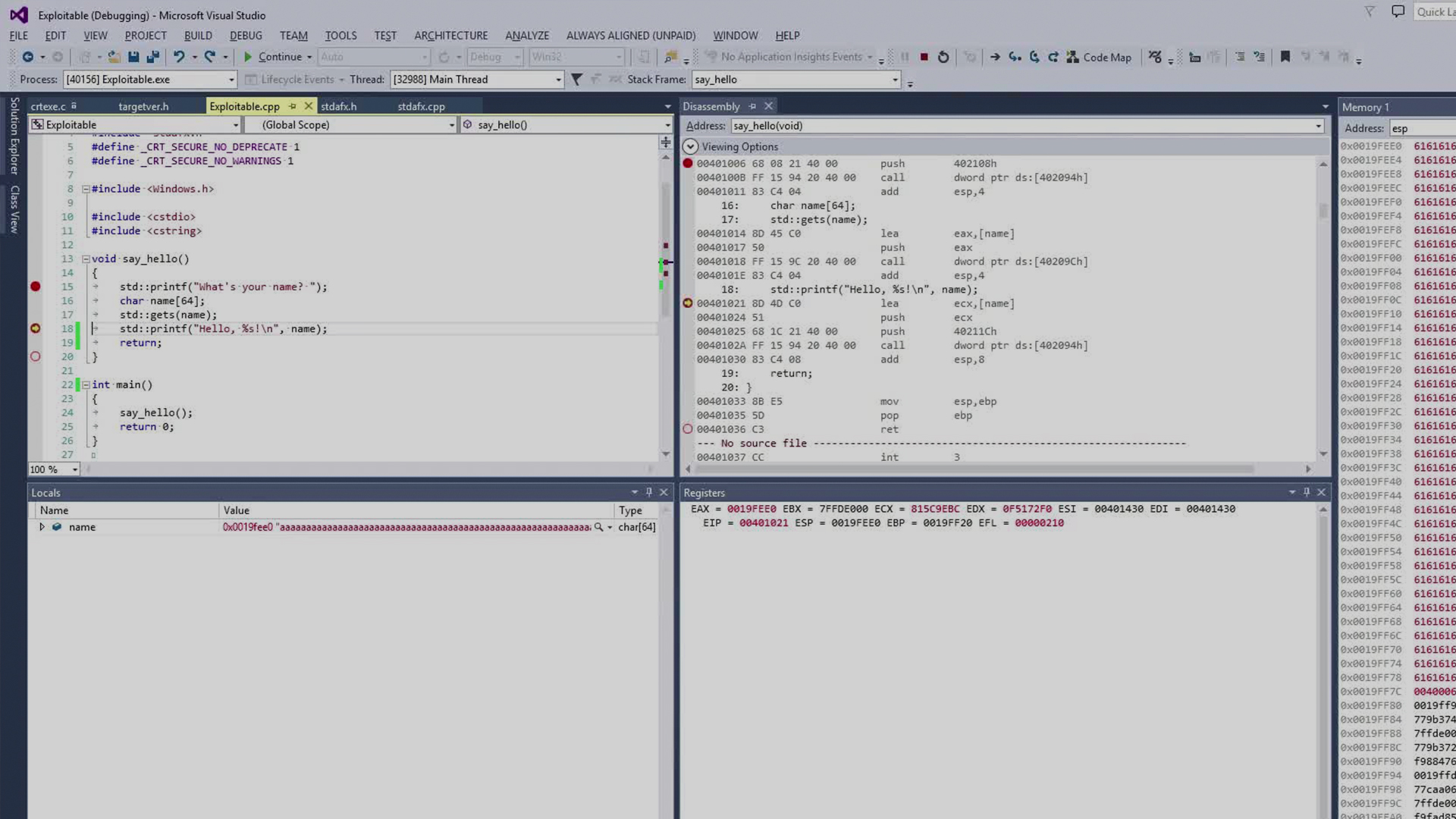

All right, so we are approaching our final panel.

33:52

And today, this panel will be talking

33:55

about what happens when AI is capable of coding.

34:00

We know at Ars that this is a big concern.

34:02

So many of you are developers

34:06

and we've heard lots of great stories.

34:07

We've heard people really afraid of stuff.

34:10

To take us there

34:11

is going to be our own Lee Hutchinson right here.

34:15

Take it away, Lee.

34:16

Okay.